Friday, December 16, 2005

Bibliography on Quantum Programming Languages

This looks like a useful resource: Simon Gay's Bibliography on Quantum Programming Languages. It's surprisingly long. It's associated with a survey paper he wrote.

Saturday, December 10, 2005

Progress With Photons, and Jitter and Skew

[This posting serves as both the fourth installment in our series on scalability, and as the papers-of-the-week entry.]

Nature this week has three intriguing papers on progress in quantum information processing with photons. I'm at home, without access to our institutional Nature subscription, so all I can read is the first paragraph. Grumble.

Jeff Kimble's group at Caltech reports on Measurement-induced entanglement for excitation stored in remote atomic ensembles. Use 10^5 atoms at each of two sites, and quantum interference when one of them emits a photon creates a single entangled state. Hmm. "One joint excitation" is the phrase they use, but I'm a little fuzzy on why they're not creating a Bell state. I'm looking forward to reading the full paper.

The other two papers, from Kuzmich's group at Georgia Tech and Lukin's at Harvard, are on the use of atomic ensembles to store qubits that can be inserted and retrieved as single photons. These have the possibility to serve as memories, or at least as latches, for photons, providing an important tool for addressing problems I think are under-appreciated: jitter and skew. Without the ability to regenerate the timing of signals propagating through a large circuit, you can't claim to have scalability.

Clock handling is one of the most complex problems in classical chip design. Signal propagation across a chip requires significant amounts of time. It's subject to two significant error processes: jitter, in which the timing of a single signal varies from moment to moment as a result of noise, voltage fluctuations, etc., and skew, where different members of a group of signals arrive at different times because their path lengths vary. (Both of these problems are substantially worse in, e.g., your SCSI cables.) We can also talk about "clock skew" as being a problem between regions of a chip.
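To make the jitter/skew distinction concrete, here is a minimal sketch in Python. Everything in it is an illustrative assumption rather than a model of any real chip: the path delays, the Gaussian noise model, and the function names are all made up. Skew shows up as a fixed spread between signals, jitter as a cycle-to-cycle spread on each signal.

```python
import random
import statistics

def arrival_times(path_delays_ns, jitter_sigma_ns, n_cycles, seed=0):
    """Return arrivals[cycle][signal], in ns after each cycle's launch edge.

    Each signal has a fixed path delay (the source of skew) plus Gaussian
    noise drawn independently every cycle (an assumed model of jitter).
    """
    rng = random.Random(seed)
    return [
        [delay + rng.gauss(0.0, jitter_sigma_ns) for delay in path_delays_ns]
        for _ in range(n_cycles)
    ]

def skew_ns(arrivals):
    """Skew: spread between the mean arrival times of the signals in a group."""
    means = [statistics.mean(column) for column in zip(*arrivals)]
    return max(means) - min(means)

def jitter_ns(arrivals):
    """Jitter: per-signal standard deviation of arrival time across cycles."""
    return [statistics.pstdev(column) for column in zip(*arrivals)]

if __name__ == "__main__":
    # Three hypothetical wires with different lengths, 50 ps of jitter each.
    arrivals = arrival_times([1.0, 1.3, 1.1], jitter_sigma_ns=0.05, n_cycles=2000)
    print("skew (ns):", skew_ns(arrivals))
    print("jitter per signal (ns):", jitter_ns(arrivals))
```

Averaging over many cycles washes out the jitter and leaves the skew, which is why the two need different fixes: skew can be designed out by matching path lengths, while jitter needs the timing to be regenerated.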

In a quantum computer, as in a classical one, there are going to be times when we want things to be in sync. We may need a pair of photons to arrive at the same place at the same time, for example. Electromagnetically induced transparency (EIT), sometimes called "stopped light", is an excellent candidate for helping here. A strong control beam (our latch clock) is focused on an atomic ensemble. Whether the beam is on or off can determine whether a separate photon (the data signal) is allowed to pass through the material, or is held in place. Thus, it can be used to align multiple photons, releasing them all to move out of the ensemble(s) at the same time. Up until now, EIT experiments have been done on classical waves; these are, as I understand it, the first reports of doing similar things for a single photon.
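The latch idea can be sketched as a scheduling problem. This is only a toy timing model under my own assumptions: each photon sits in its own ensemble, all ensembles release on one shared control edge, and `max_hold_ns` is a hypothetical storage limit, not a number from any of the papers.

```python
def latch_schedule(arrival_ns, max_hold_ns):
    """Pick a common release time for photons that arrive at different times.

    arrival_ns  -- arrival time of each photon at its own ensemble (ns)
    max_hold_ns -- assumed longest time an ensemble can hold a photon (ns)

    Returns (release_time, hold_times). The release edge is placed at the
    latest arrival, so the last photon is not held at all.
    """
    release = max(arrival_ns)
    holds = [release - t for t in arrival_ns]
    if max(holds) > max_hold_ns:
        raise ValueError("arrival spread exceeds the assumed storage time")
    return release, holds

if __name__ == "__main__":
    release, holds = latch_schedule([10.0, 12.5, 11.0], max_hold_ns=5.0)
    print(release, holds)  # 12.5 [2.5, 0.0, 1.5]
```

The point of the sketch is that the latch turns an arrival-time spread into a storage-time requirement: the memory only needs to hold a photon for as long as the worst-case skew plus jitter.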

Prof. Harris at Stanford is one of the leading lights (sorry) in EIT. The Lukin group is another, and Prof. Kozuma of Tokyo Institute of Technology has some promising-looking work.

Visiting L.A.: WCQIS, USC/ISI, and USC

Next week I'll be in L.A. On Tuesday I'm giving a talk on "The Design of a Quantum Multicomputer" at ISI, on Wednesday I'll be visiting USC, and Thursday through Sunday I'll be at the Caltech Workshop on Classical & Quantum Information Security. I might be giving a short talk at WCQIS, but I'm not sure yet.

If you see me, stop me and say hi. I'm especially looking to meet people who are interested in quantum computer architecture.

Thursday, December 08, 2005

Hayabusa: Good News and Bad News

Good news: JAXA is now saying that on Monday they recovered control of Hayabusa (the spacecraft that landed on an asteroid), and can probably bring it back to Earth as originally planned.

Bad news: the widget that was supposed to fire balls at the surface so they could collect the rebounding material apparently failed to fire.

? news: they think that just the landing might have kicked up some material into the collector, that they might be able to retrieve once the probe has returned to Earth.

Saturday, December 03, 2005

3D Mid-Air Plasma Display

Remember the animated, holographic chess set in the first Star Wars?

Yesterday Keio had its annual Techno-Mall, a mini-exhibition where about sixty professors' labs set up booths and demonstrations to show off their research for the public, some alumni, the Keio brass, and funding bigwigs.

I was there with my physics professor Kohei Itoh's group, discussing our research on the silicon NMR quantum computer. My CS prof, Fumio Teraoka, had a nice demo of fast handovers in mobile IPv6. There was heavy emphasis on sustainable, environmentally friendly engineering, including a lecture by Kengo Kuma, a famous architect who is a visiting professor at Keio. A lot of research is also taking place on assistive technologies for the elderly and disabled. The bicycle that balances itself while you pedal, and the wheelchair that can help you lift your arm both look promising. Material science and computer science in various forms also seem to be strengths of Keio.

But the most startling thing I saw, by far, was a videotape. Prof. Taro Uchiyama and his group have developed a display that can create glowing, animated images in mid-air, floating above the device itself. So far, they can do things like a fluttering butterfly, or three characters of text, totaling a hundred pixels or so. In a year, they hope to be animating fully 3D characters of the quality of, say, a human being built out of Legos.

What's the trick? They focus a laser down to a point small enough to create, for a moment, a glowing plasma in mid-air! They can scan the focus point in three dimensions, not really fast enough yet, but tolerable. In fact, it's a bit reminiscent of laserium light shows in its current incarnation, though it's white light only.

The images are currently table-top size, but Prof. Uchiyama thinks they can scale this up a long ways, and one of their posters shows a graphic of it being used by a lighthouse at the beach, skywriting tsunami warnings. How much power does this take? I asked, and got a non-answer, but that's presumably the reason they were demoing a videotape instead of the device. Students are in the demo, wearing very heavy safety goggles.

This is mind-bending stuff; I wonder how far they'll really be able to take it. I hope they succeed, both technically and commercially.

Unfortunately, I can't find anything about this on the web. Prof. Uchiyama says he's looking for someone to commercialize it, either here or in the U.S.

Friday, December 02, 2005

6.4 Off the Coast of Miyagi-ken

As long as I'm keeping track of recent quakes, we had a 6.4 a couple of hundred kilometers north of here at 22:13 local time. There was only a little shaking here, but I was in the bathtub (oops, is that too much information?), which was an interesting experience. Not strong enough to feel quite like the agitate cycle... No reports of any damage; it only reached 3 on the Japanese subjective shaking scale.

Coming up after I can get it typed in, a report on Keio's 3D mid-air animated plasma display...

Thursday, December 01, 2005

Fireball Over Abiko?

At 22:18 Japan time, as I was walking home, I saw something I can't quite explain. A bright light came over a house that was blocking my view to the west, split in two, then forked a third. The first two were bluish-white, the third orange. It looked like a Roman candle, but didn't show any ballistic arc, just the path of a meteor. It petered out overhead, near Mars, having covered about 30 degrees of sky. It was brighter than Mars, but not impossibly bright, comparable to or slightly brighter than the brightest meteors I've seen.

This is in Abiko, northeast of Tokyo, at roughly 35.88N 140.03E.

Reading Out Multiple Ions Simultaneously

I'm catching up a little on my reading, so expect a fair number of paper reviews over the next week or so. Most of these will be quantum computing papers with a focus on how large systems are going to develop.

Starting from the top of the stack, Mark Acton and company from Chris Monroe's lab at Michigan just posted the paper Near-Perfect Simultaneous Measurement of a Qubit Register on the arXiv. This is one of a string of excellent papers to come out of Monroe's lab in the last half year or so.

Acton et al. are measuring ions in an ion trap using an intensified CCD. They use the hyperfine states of 111Cd+ ions in a linear trap as the qubits. A photocathode and fluorescent screen intensify the photons coming from the ions themselves, and the result is focused onto a small CCD. In their example, a 4x4 group of pixels is binned into a single pixel, and a 7x7 block of those is used to detect each ion. Readout time is 15 ms for the whole CCD right now; they claim reducing that to 2.5 µs/ion is doable with currently available CCDs. One limit in their current setup is that the waist of the detection beam is about 10 µm, compared to an ion spacing of 4 µm. They achieved readout accuracy of 98%, and a significant part of the inaccuracy is believed to be due to neighboring ions influencing each other. The experiment was done on a chain of three ions, and the paper includes images of binary counting from 000 to 111.
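The pixel grouping is easy to picture in code. The sketch below is my own illustration, not the paper's analysis: it bins raw pixels 4x4, sums a 7x7 block of binned pixels per ion, and calls an ion bright if the sum exceeds a threshold. The threshold rule, the "1 = bright" labeling, and all the function names are assumptions.

```python
def bin_pixels(image, factor=4):
    """Sum each factor x factor group of raw pixels into one binned pixel."""
    rows, cols = len(image), len(image[0])
    if rows % factor or cols % factor:
        raise ValueError("image size must be a multiple of the binning factor")
    return [
        [
            sum(image[r + dr][c + dc]
                for dr in range(factor) for dc in range(factor))
            for c in range(0, cols, factor)
        ]
        for r in range(0, rows, factor)
    ]

def block_sum(binned, top, left, size=7):
    """Total counts in the size x size block of binned pixels for one ion."""
    return sum(binned[r][c]
               for r in range(top, top + size)
               for c in range(left, left + size))

def read_register(binned, ion_columns, top, threshold, size=7):
    """Return a bit string, one bit per ion; '1' means bright (assumed label)."""
    return "".join(
        "1" if block_sum(binned, top, left, size) > threshold else "0"
        for left in ion_columns
    )

if __name__ == "__main__":
    # Fake 28 x 84 raw frame: three ions side by side, only the middle one glowing.
    raw = [[1 if 28 <= c < 56 else 0 for c in range(84)] for _ in range(28)]
    binned = bin_pixels(raw)  # 7 x 21 binned pixels
    print(read_register(binned, ion_columns=[0, 7, 14], top=0, threshold=100))
```

With these numbers each ion is read from a 28x28 patch of raw pixels. For the three-ion chain, the quoted 2.5 µs/ion would come to 7.5 µs in total, against 15 ms for the whole CCD today, roughly a factor of 2000 if the claim holds.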

This paper is important because concurrent readout of ions is critical for making quantum error correction work, as well as generally running algorithms faster. Rather than reading the ions in-place in a single trap, of course, the ions could be moved apart first, but that movement can create errors, and of course if they are separated then you have to have multiple laser beams for the detection. Beyond the beam waist problem, it's not clear to me what limits the number of ions this can scale to, but in general we are interested in only a few per trap at a time, so it should work.

When I visited Andrew Steane at Oxford in January, he had an old printout on his door showing pictures of binary states up to some larger number; 63 or maybe even 127? At first glance, this appears to be similar to that work, though I don't know much about the Steane experiments. I suspect those were more about the preparation of the numeric states, whereas this is about how to build a detector that can work reliably for multiple ions. At any rate, the accuracy of Acton's detection is remarkably good, and bodes well for continuing work on ion traps.

[Update, 12/2: the pontiff has a posting on a couple of other recent, good ion trap papers.]

Hayabusa in Trouble?

A few days ago, I reported happily about Hayabusa's visit to an asteroid. Today, there is less happy news; the probe is having engine trouble, apparently is unable to control its attitude, and may not be able to return to Earth as planned. Apparently, there's a good chance that it could come back anyway, four or five years late; they will have to make a decision about whether to continue funding for the project, or cut their losses. (The Hayabusa project home page seems to be several days out of date, in both Japanese and English; how can that be acceptable?)

The Daily Yomiuri article about the problems is extremely critical of JAXA, the Japan Aerospace Exploration Agency. JAXA was just created a couple of years ago by merging two separate agencies, and reportedly factionalism still exists inside the organization. The article ends with rather dour warnings that JAXA needs to do a better job of explaining itself and its research to the public, as well as fix its technical problems.

On a different topic, the article also makes mention of JAXA's successful test of a model for a supersonic jetliner, done in Australia in October. I somehow missed this, but there's a good article at the Register (including links to the flight data at JAXA) and video of the launch at the BBC (in both Windows Media and Real formats, though I swear it looks like somebody videotaped a TV, rather than used an actual feed). The goal is reportedly a 300-seat Mach 2 airliner that's as fuel- and noise-friendly as a current jumbo jet (which is to say, not very, but a heck of a lot better than the Concorde). Unfortunately, they are speculating that it won't fly until 2020 or 2025.

International Robot Exhibition

This is mostly a heads up for those of you around Tokyo -- the 2005 International Robot Exhibition started yesterday and runs through Saturday (Dec. 3). Some of the same robots that were at the Aichi Expo are there, but this is primarily an industry trade show, not a tourist attraction or educational exhibit. Nevertheless, I'm going to try to go on Saturday; if I manage to, I'll definitely report back, hopefully with photos.

Monday, November 28, 2005

Mom, Your Teapot Emailed Me, And...

The Daily Yomiuri reports today on some new inventions intended to invade your privacy, er, help the kids of the elderly make sure they are okay. (The article is bylined "The Yomiuri Shimbun", which I often suspect means they are cribbing from press releases.)

The first one is an electric hot water maker for tea (a "potto", or hot pot) from Zojirushi (the biggest hot pot maker). The i-Pot is the first product in their "Mimamori" ("keeping an eye out") service. (Website is here, but Japanese only; a few photos are here.) It has a built-in DoCoMo DoPa packet radio transceiver, and sends email twice a day to a designated list of recipients with the recent usage records, or you can poll it to find out the latest. The pot is 5,250 yen (about $50), and the service is 3,150 yen (about $30) a month. They claim to have more than 2,500 customers already.

I've never seen my mother-in-law drink water; she drinks tea continuously. But what if yours doesn't? Not to worry, ArtData can attach sensors to everything from your refrigerator door to the toilet floor mat to check up on you. Paramount Bed Co. makes an adjustable (hospital-style) bed that will report the height and tilt of the bed via some sort of wireless, and Yozan has a gadget based on a two-way pager that the city government of Wako, Saitama Prefecture is deploying. A local government official sends you a message each morning, and you respond by pressing one of the three buttons, "well", "not well", or "call me".

The privacy implications are not going unnoticed, but Japan is most decidedly coming down on the side of more information. Helping the community take better care of the rapidly aging population here is seen as the principal imperative.

Sunday, November 27, 2005

Stop Me If You've Heard This One Before...

Found via these four physics blogs, a Register article saying that Maxell will be offering holographic storage developed by InPhase Technologies by late 2006. 300GB, 20MB/sec. (what's the access time?), roadmap to 1.6TB/disk.

Creating a new, removable, random-access, high-performance storage medium is really hard. For optical things, the readers/writers tend to be expensive; to amortize that cost and get competitive GB/$, you want to make it removable, which means you have to lock in a data format for years, which hurts your density growth (while fixed magnetic disks are growing at 60-100%/year), makes more complex mechanics, makes it harder to keep the head on track, and makes serious demands on interoperability of readers and writers. The heavier optical heads make fast seek times problematic, too.
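A little back-of-the-envelope arithmetic shows why these numbers matter. The sketch below assumes decimal units (1 GB = 10^9 bytes), a sustained 20 MB/sec with no seek overhead, and a hypothetical three-year format lock-in; none of those assumptions come from the announcement.

```python
def full_read_seconds(capacity_bytes, rate_bytes_per_s):
    """Time to stream an entire disk at a constant transfer rate."""
    return capacity_bytes / rate_bytes_per_s

def growth_factor(annual_rate, years):
    """Compound density growth: 0.60 means 60% per year."""
    return (1.0 + annual_rate) ** years

if __name__ == "__main__":
    GB, MB = 1e9, 1e6  # decimal units, an assumption
    for capacity_gb in (300, 1600):
        seconds = full_read_seconds(capacity_gb * GB, 20 * MB)
        print(f"{capacity_gb} GB at 20 MB/s: {seconds / 3600:.1f} hours")
    # How far fixed magnetic disks pull ahead while a removable format is frozen.
    for rate in (0.60, 1.00):
        print(f"{rate:.0%}/year for 3 years: {growth_factor(rate, 3):.1f}x")
```

At 20 MB/sec, reading a full 300GB disk takes a bit over four hours, and a 1.6TB disk at the same rate would take about 22 hours. Meanwhile, a format frozen for three years falls roughly 4x to 8x behind fixed disks growing at 60-100%/year.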

Good luck to them. I hope they have better luck than ASACA (where I'm proud to say I worked on just such a difficult project; I'm sad to say it failed, but the company still does well with autochangers for many media types), Terastor (whose website claims $100M in development generated 90 patents; too bad no successful drives/media came out), Quinta (now completely gone, as far as I can tell; absorbed back into Seagate?), Holoplex (gone), Tamarack (gone?), and a host of others did on high-speed magneto-optical, near-field magneto-optical, and holographic storage media.

I can only think of five successful removable, rotating media types: three sizes of floppy disk, CDs, and DVDs. You might throw in the first MO (600MB) and one or two types of removable hard disk, if you're generous. The newer optical media require long lead time, large corporate consortia, and enormous standards, marketing, and manufacturing efforts.

Will this finally be holographic storage's breakthrough? Stay tuned...

[Update: Sam Coleman, one of the gods of supercomputer mass storage and hierarchical mass storage, says he first saw holographic storage in 1979, and delivery was promised in eighteen months. Sam found this page with a little such history on it.]

A Visit to an Asteroid

I don't know if this is making much news elsewhere, but the Japanese probe Hayabusa touched down on the asteroid Itokawa on Saturday, and is believed to have retrieved soil samples which it will hopefully return to Earth next June.

This is the first landing on a body other than the Moon, Mars, and Venus [note: that's what their press release said, but they've apparently forgotten about Cassini-Huygens' landing on Titan], and the first by a country other than the U.S. or U.S.S.R./Russia. If the sample return succeeds, it will be the first sample return (by anyone) from beyond the Moon's orbit, too. The probe uses an ion engine, which is very advanced technology, and had to navigate and land autonomously due to the distances involved, which is impressive. It hasn't all been smooth; this was the second landing/sample retrieval attempt, and a secondary probe failed to land, as well.

Itokawa is a fascinating asteroid; it has no craters. It looks a little like Joshua Tree, with lots of rocks sticking up out of a dusty plain.

A somewhat broken English news release is here, better pictures on the Japanese page here, current status (English) here, and the English project home page here.

Saturday, November 26, 2005

Ion Trap Quantum Computer Architecture

With the deadline for the 2006 conference in a week, it seems like a good time to talk about the International Symposium on Computer Architecture. ISCA is the premier forum for architecture research; it's very competitive and papers there are, as a rule, highly cited.

Mark Oskin's group published a good ion trap system architecture paper in ISCA 2005. The paper "An Evaluation Framework and Instruction Set Architecture for Ion-Trap based Quantum Micro-architectures" shows how to take the technology designed by the Wineland group at NIST and begin designing a complete computer out of it. They evaluate different choices of layout: how many traps per segment of wire, how to minimize the number of turns an ion must make, etc. They also discuss their tool chain for doing much of the work.

When we systems folk (there are only a handful of us in quantum computing, with Mark leading the class) talk about architecture, this is the kind of thing we mean. This work suggests that most of the decoherence in an ion trap system will come from turning corners, and therefore the research they are doing has the potential to have a dramatic effect on the practical threshold necessary for QEC to be effective.

[Disclaimer: Mark and I have a joint paper under consideration, though I had nothing to do with the work described here.]

Friday, November 25, 2005

Music Review: Tatopani at Sweet Basil

Double disclaimer: number one, Robbie Belgrade is a friend of mine, number two, the music of Tatopani is exactly the kind of music I like :-). So, your mileage may vary.

Last night Mayumi and I snuck out to hear Tatopani play at Sweet Basil in Roppongi. They were playing a (sold out!) release party for their new CD, Azure (which has been reviewed by Daily Yomiuri, Mainichi, and Japan Times, so if you don't trust my review, try them).

I have heard Robbie play with a couple of other groups; I like this one the best, by far. Tatopani is Robbie, Chris Hardy, and Andy Bevan, with the new addition of Bruce Stark, all of whom have lived in Tokyo for a long time.

So what's the music? One of them described Tatopani as "kick-ass world music" in an interview. That's as good a description as any I could come up with; I don't have the technical vocabulary to explain it. If you like, say, Yo-Yo Ma's Silk Road albums, recent Wayne Shorter, and Dave Brubeck's "Blue Rondo a la Turk", you're going to like Tatopani. This is not the "world pop" of somebody like Angelique Kidjo, nor the driving rock-influenced work of Joe Zawinul. The rhythms are complex, but there is a lot of space in the music, and indeed they name Miles Davis' "Bitches Brew" as an influence.

All of the guys play an array of instruments. Robbie's preferred woodwind axe is a bass clarinet, but I might actually like his sax playing better. For good measure, he plays a very mean tabla, and a pandeiro (Brazilian tambourine) that has remarkable expressiveness. Chris's percussion setup looks like he went into a percussion store and bought one of everything. He had seven gongs, eleven cymbals, a djembe, another African drum (ashiko, maybe?), a wooden box, a doumbek with a high, crisp sound, and on and on. On one tune, he played a terracotta vase which had a nice bass and a higher, clear sustain. Andy played soprano sax, didjeridoo, flute, and some percussion. He played a kalimba (African alto music box played with your thumbs, more or less) on one very nice tune, "El Jinete". Bruce is the slacker in the instrument count department; he stuck with acoustic and electric pianos, except for one tune he contributed some percussion to.

Most of the tunes on Azure were written by Robbie. Some start with a spacious, slow opening before moving into more up-tempo sections. I really like the bass groove Bruce lays down on the opening track, "Azure". As you'd expect with a group like this, the rhythms are hideously complex in places; one tune is in 11/4 time (named, not coincidentally, "Eleven"), and I couldn't identify the time signatures on a couple. They opened their second set with "Bat Dance", by Chris, in which Robbie and Andy support a long, complex, exciting solo by Chris on the frame drum. The range of sounds ten fingers can get out of a piece of skin stretched across a wide, shallow wooden frame is simply amazing.

Sweet Basil is a great place to hear music. It's new, the food and sound are quite good, and the ventilation keeps the smoke level down. The desserts were excellent, and the potato soup rich. Mayumi had duck and I had lamb for main dishes; they needed a little salt and pepper, but were well prepared and presented. If you're headed there, make reservations, and show up early. Seating is by order of arrival. Tatopani sold out; I would guess the place probably holds 200-300 people. We got there early enough for good, but not great, seats, about 7:00.

The web site only lists last night's gig, but they were talking about a number of other appearances scheduled around Japan in the next several months. Hopefully they'll post a schedule soon. I know Chris is playing a percussion duo concert with, um, I'm having trouble reading the name, Shoko Aratani? at Philia Hall on March 4.

Hmm, Chris and Shoko are also playing with Eitetsu Hayashi on December 23rd? Hayashi is the taiko player of whom another professional musician said, "When Eitetsu plays, time stops and you can hear the Universe breathe." He's astonishing. See this if you can!

Tatopani's CDs can be ordered through their web site, where you'll also find sound samples.

Wednesday, November 23, 2005

Japanese Gov't to Reward Cybersecurity

The Daily Yomiuri reports today that a tax credit enacted in 2003 will expire at the end of the fiscal year, as originally planned. 10 percent of the purchase price of "IT devices" (from PCs to fax machines; not including software?) is deducted directly from corporate tax. In fiscal 2005, companies will save about 510 billion yen (almost five billion U.S. dollars). (I have lots of questions about the aims and success of this measure, especially regarding software, but that's not the point of this posting.)

In its place, the government is considering enacting a package to improve the international competitiveness of companies. One provision may be a similar deduction of "10 percent of the retail price of database management software and other devices aimed at preventing illegal computer access, the use of spyware and information leak among other incidents."

That's a noble but vague goal; I could argue that practically anything was bought to further my cybersecurity. Moreover, security is more about practices than purchases.

I also wonder if companies that create cybersecurity problems a la Sony will have their taxes raised?

Tuesday, November 22, 2005

Shinkansen in China

The Yomiuri Shimbun is reporting today that China will buy sixty Hayate shinkansen (bullet trains) from a consortium of Japanese companies for its planned 12,000km network of high-speed trains. Apparently they will also buy ICE trains from Germany, but not TGV trains from France. No idea how they plan to apportion the different technologies; by region?

Hayate trains currently run at up to 275kph, though JR East is said to be working on speeds up to 360. The Chinese network is proposed to run at 300.

The report says the network will cost more than ten trillion yen (about 80 billion dollars), but doesn't say how many years it will take to do the whole buildout (though some services may start as early as 2008), nor what fraction of that money will be spent on Japanese-made rolling stock. Nikkei says the Hayate trains cost 250 million yen ($2M) per car, and I think those trains are typically ten cars; times sixty trains, that's $1.2 billion.
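The rolling-stock estimate is easy to check in a few lines. This is only a sketch of the arithmetic in the paragraph above: the per-car price is Nikkei's figure as quoted, the ten-car train length is a guess, the $80 billion network cost is the rough conversion given above, and the names are made up for illustration.

```python
# Back-of-the-envelope cost of the Hayate order, using the figures quoted above.
COST_PER_CAR_USD = 2_000_000   # Nikkei: 250 million yen, roughly $2M per car
CARS_PER_TRAIN = 10            # assumption: "typically ten cars" is a guess
TRAINS_ORDERED = 60            # sixty trains, per the newspaper report
NETWORK_COST_USD = 80e9        # ten trillion yen, about $80 billion


def rolling_stock_cost_usd(cost_per_car, cars_per_train, trains):
    """Total purchase price for the trains alone, in U.S. dollars."""
    return cost_per_car * cars_per_train * trains


total = rolling_stock_cost_usd(COST_PER_CAR_USD, CARS_PER_TRAIN, TRAINS_ORDERED)
share = total / NETWORK_COST_USD

print(f"Rolling stock: ${total / 1e9:.1f} billion")
print(f"Share of reported network cost: {share:.1%}")
```

If those inputs are anywhere near right, the trains themselves are a small slice of the total; most of the money would go to track, stations, and everything else.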

And in America we have only the Acela, and only in a small area.

How Many Qubits Can Dance on the Head of a Pin?

[Third installment in examining the meaning of scaling a quantum computer. First is here, second one is here. Continued in installment four, here]

I have the cart slightly before the horse in my breadbox posting. In that posting, we were talking about a multi-node quantum computer and its relationship to scalability. But before getting to my quantum multicomputer, we should look at the scalability of a single, large, monolithic machine.

Again, let's consider VLSI-based qubits. In particular, let's look at the superconducting Josephson-junction flux qubit from Dr. Semba's group at NTT (one good paper on it is this one). Their qubit is a loop about 10 microns square. This area is determined by physics, not limited by VLSI feature size; the size of the loop determines the size of the flux quantum, which in turn determines control frequencies, gate speed, and whatnot. (The phase qubit from NIST is more like 100 microns on a side; it's big enough to see!) Semba-san's group is working hard on connecting qubits via an LC oscillator. The paper cited above shows that one qubit couples to the bus; the next step might be two qubits.

So, once they've achieved that, is it good enough? Do they then meet DiVincenzo's criterion for a scalable set of qubits? Let's see. As an engineer, I don't care if the technology scales indefinitely; I care if it scales through regions that solve interesting problems. In this case, we're looking for up to a quarter of a million physical qubits.

Can we fit them on a chip? At first glance, it would seem so. Even a small 10 mm square chip would fit a million 10-micron square structures. But that ignores I/O pads. Equally important, a glance at the micrograph in the paper above shows that the capacitor is huge compared to the qubit (but you only need one of those per bus that connects a modest-sized group of qubits, and it may be possible to build the capacitor in some more space-efficient manner, or maybe even put it off-chip?). Still more important, these qubits are magnetic, not charge; place them too close together, and they'll interfere. I have no idea what the required spacing is (though I assume Semba-san and the others working on this type of qubit have at least thought about this, and may even have published some numbers in a paper I haven't read or don't recall). Control is achieved with a microwave line run past the qubit, so obviously you can't run that too close to other qubits. All of this is a long-winded way of saying there's a lot of physics to be done even before the mundane engineering of floor-planning.

We talked a little in the last installment about the need for I/O pads and control lines into/out of the dilution refrigerator. That applies directly to the chip, as well; now we need roughly a pin per qubit, unless a lot more of the signal generators and whatnot can be built directly into our device. Without some pretty major advances in integration, I'll be surprised if you can get more than a thousand qubits into a chip (whether they're charge, spin, flux, what have you). So does that mean we're dead? We can't reach a quarter of a million qubits?

The basic idea of the quantum multicomputer is that we can connect multiple nodes together. We need to create an entangled state that crosses node boundaries, so we need quantum I/O. So, you need the ability to entangle your stationary qubit with something that moves - preferably a photon, though an electron isn't out of the question; a strong laser probe beam may work, too. Then you need a way to get the information out of that photon back into another qubit at the destination node. This is going to be hard, but the alternative is a quantum computer that doesn't extend outside the confines of a single chip. This idea of distributed quantum computing goes back a decade, and there have been some good experiments in this area; I'll post some links and references another time.

Bottom line: for the foreseeable future, scalability requires I/O - classical for sure, and maybe quantum. More than a matter of die space for the qubits themselves, I/O pads and lines and space for them on-chip, plus physical interference effects, will drive how many qubits you can fit on a chip. If that number doesn't scale up to the point where it is not the limiting factor in the size of system you can build, then you have to have quantum I/O (some way to entangle on-chip qubits with qubits in another device or another technology).

[Update: Semba-san tells me that the strength of the interaction could be a problem if the qubits are only a micron apart, but at 10um spacing, the interaction drops to order of kHz, low enough not to worry about much.]
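The round numbers in this post can be sketched as a quick calculation. Everything here is an assumption taken from the text (10 mm chip, 10-micron qubits, a guessed ceiling of a thousand qubits per chip, a target of a quarter of a million physical qubits); the function names are hypothetical, and the node count is just what falls out if the thousand-per-chip guess holds.

```python
# Rough qubit-count arithmetic from this post; inputs are the post's own
# round numbers and the function names are hypothetical.
import math


def qubits_by_area(chip_side_mm, qubit_side_um):
    """Square qubits that tile a square chip, ignoring pads, buses, and spacing."""
    per_side = int(chip_side_mm * 1000 // qubit_side_um)
    return per_side * per_side


def nodes_needed(target_qubits, qubits_per_chip):
    """Chips (nodes) required if each one holds qubits_per_chip qubits."""
    return math.ceil(target_qubits / qubits_per_chip)


area_limit = qubits_by_area(chip_side_mm=10, qubit_side_um=10)
node_count = nodes_needed(target_qubits=250_000, qubits_per_chip=1_000)

print(f"Area-only limit: {area_limit:,} qubits per chip")
print(f"Nodes needed at 1,000 qubits per chip: {node_count}")
```

The gap between the area-only limit and the I/O-limited guess is the whole argument: die space is not what runs out first, so a machine of this size would have to span a few hundred chips.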

Sunday, November 20, 2005

Joint Chip Plant in Japan in 2007?

The Yomiuri Shimbun is reporting that five Japanese semiconductor makers, Hitachi, Toshiba, Matsushita Electric Industrial, NEC Electronics, and Renesas Technology, are planning a 65-nanometer wire width plant. It will be the first time Japanese manufacturers have done a joint chip plant.

Construction is expected to start next year, production in 2007 "at the earliest". Cost might be 200 billion yen (almost two billion U.S. dollars); the share to be paid by each company hasn't been determined yet. The article says it's necessary to keep the companies competitive in the face of "huge capital investments" by South Korean and U.S. manufacturers. I don't think they've picked a location yet.

Blogalog: Computer Graphics in Japan?

A pure computer graphics question, the first in a while. I know SIGGRAPH was months ago, but I never got around to asking some of my questions.

Was there any CG art (still or animated) from Japan this year? Who, from what organization? (Got a link?)

Any good technical papers from Japan, or exciting new technology from Japan on the show floor?

I know there are a lot of CG artists in Japan, and I'm not in that circle. It would be fun to get hooked up with them and at least attend the occasional talk or art show.

Thursday, November 17, 2005

Warp Drive Patented!

Okay, I know I'm blogging a bunch this week, and this seems more like a Friday afternoon thing than a real workday item, but I can't resist this one. Courtesy of Dave Farber's Interesting People list, we have the discovery of a fascinating patent issued by the USPTO on Nov. 1, 2005 to Boris Volfson.

Topic of patent #6,960,975? "Space Vehicle Propelled by the Pressure of Inflationary Vacuum State". A warp drive. Use 10,000-tesla magnets and a 5-meter shell of superconducting material, and you, too, can warp space and time, and travel at "a speed possibly approaching a local light-speed, the local light-speed which may be substantially higher than the light-speed in the ambient space"!

I wonder if this is the first patent for H.G. Wells' cavorite, the anti-gravity material in his novel The First Men in the Moon? (Volfson calls out the comparison directly.)

PDF and HTML versions of the patent available.

Congratulations, Yoshi!

Yoshihisa Yamamoto, professor at Stanford and NII and world-renowned expert on quantum optics and various forms of quantum computing technology, received the Medal of Honor with Purple Ribbon yesterday, apparently from the emperor himself. Quite an honor, especially as Yoshi is among the younger recipients.

This is Getting Monotonous...

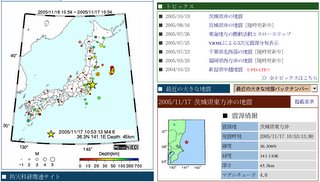

Another 4.8 off the coast of Ibaraki-ken. Figure this time is from Hi-net. The star is the most recent one; the rest are all activity in the last 24 hours. In the right-hand map, we live near the border between the two prefectures in the lower right, almost due north of the easternmost edge of Tokyo Bay. Shaking this time was pretty mild; you definitely wouldn't have noticed it riding in a car or train, and might not have even while walking.

First Frost in Abiko and W in Kyoto

There's frost on the ground here in Abiko today, and even at 7:30 a.m. Narita Airport is reporting 2C as the temperature. The tree leaves this year are not very pretty, thanks to a sullen but not crisp October. Mikan and the many varieties of mushrooms are in season; I had a Chinese dish for lunch yesterday with maitake, which was a bit surprising. Fall is in full swing.

President Bush is in Kyoto, visiting with Prime Minister Koizumi. Laura tried her hand at Japanese calligraphy, and the photo in the paper this morning looks like she caught on quickly.

There was a column in yesterday's Daily Yomiuri by a guy from the Heritage Foundation, who called the state of U.S.-Japan ties the best they've been in fifty years. That's pure spin, but it is true that they're pretty good right now. Bush and Koizumi seem to honestly like each other; their personalities are both swaggering cowboy. Except for beef, trade friction is low. Both are worried about the rise of China, both economically and militarily (environmentally should be a concern, too). Condi and Aso are in Pusan, working on North Korea. We're finishing up a reworking of the security deal. But, most important, Bush and Koizumi need each other. Japan is one of the biggest coalition partners in Iraq. Japan needs U.S. support for the permanent U.N. Security Council seat it so desperately wants.

It's not all smooth; Okinawa has an ambivalent relationship with the U.S. military, and the presence there is getting shuffled. The Okinawa governor took exception to Koizumi's remark that Japan must pay a "certain cost" to enjoy the security brought by the alliance. He correctly interprets that cost as Okinawa continuing to put up with our military.

Wednesday, November 16, 2005

Magnitude 4.8 Wakeup Call

Am I a prophet, or what? (Mostly what, but I'm working on the prophet thang in case the academic gig falls through.)

At 6:18 this morning, a magnitude 4.8 earthquake struck off the coast of Ibaraki-ken, 40km down. Here in Abiko, it was about shindo 2. Woke me up, sort of (usually I'm up before 6:00, but I worked until 1:00 last night). Not enough to get the adrenalin going, so I just rolled over and went back to sleep. My mother-in-law, who was walking between her house and ours, didn't even feel it.

This is at least the third from the same area and roughly the same size in the last month, and it seems like there has been one about every two weeks since we moved to Abiko in March. As long as it's releasing stress instead of building up, I'm happy.

Monday, November 14, 2005

3D Earthquake Info

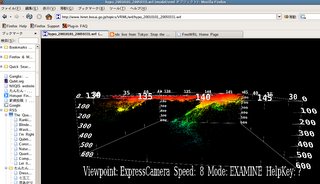

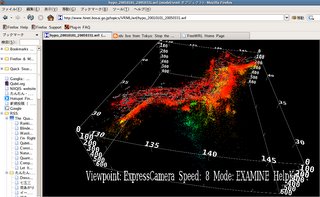

While digging around for the last blog entry, I found a 3D VRML dataset of a year's worth of earthquakes under Japan. A couple of screen shots are above. You can see the subduction zone at the eastern edge of the isles extraordinarily clearly.

I'm using Firefox on Fedora Core 4 Linux. The FreeWRL FC3 RPM installed cleanly and works pretty well for VRML, but doesn't seem to do X3D properly. Be warned, this might take a couple of minutes to load; be patient.

The links:

This is a great use of 3D graphics.

Earthquake Info on Japan

My grandmother, whose ninetieth birthday is coming up soon, watches TV a lot, and calls my mom whenever there's an earthquake reported in Japan.

When there is an earthquake here, I check with tenki.jp, which is the government weather agency's website. Just above and to the right of the map is a pull-down menu of recent quake dates and times. The color-coded dots are the Japanese subjective shaking scale, which goes something like this:

- shindo 1: felt by sensitive people

- shindo 2: felt by many people

- shindo 3: wakeup call!

- shindo 4: pick up items previously precariously perched at higher levels

- shindo 5: call a handyman

- shindo 6: call a contractor

- shindo 7: call the army

Note that, although the numbers are similar, this is not the same as the magnitude of the earthquake; these numbers are what a person feels locally.

That info is filtered and takes a few (6-10) minutes to get posted. Hi-net is the direct access to the seismographic network.

Unfortunately, neither of those seems to have a decent English presence. I like tenki.jp because it has an i-mode interface, so when a quake hits in the middle of the night, I roll over, check my cell phone, then go back to sleep.

It does seem that we are having a lot of magnitude 4.0-5.3-ish quakes lately, mostly just north of us in Ibaraki-ken.

Sunday, November 13, 2005

Bigger Than a Breadbox, Smaller Than a Football Stadium

[Second in the "practicalities of scalable quantum computers" series. The first installment was Forty Bucks a Qubit. Third is How Many Qubits Can Dance on the Head of a Pin?]

After talking to various people, I'm raising the target price on my quantum computer to a hundred million dollars. The U.S. government clearly spends that much on cluster supercomputers, though I don't think that they reached that level in the heyday of vector machines. BlueGene, for example, built by IBM, has 131,072 processors (65,536 dual-core), and there's no way you're going to build a system like that, counting packaging, power, memory, storage, and networking, for less than a grand a processor (all these prices are ignoring physical plant, including the building).

Let's talk about packaging, cooling, and housing a semiconductor-based quantum computer. Even though we're talking about manipulating individual quanta, the space, power, thermal, and helium budgets for this are large. Helium? Yeah, helium. Both superconducting qubits and quantum dot qubits are operated at millikelvin temperatures, and the way you get there is by using a dilution refrigerator.

A dilution refrigerator, or dil fridge, uses the different condensation characteristics of helium-3 and helium-4 to cool things down to millikelvin temperatures. See the web pages here, here, and here, and the excellent PDF of the Hitchhiker's Guide to the Dilution Refrigerator from Charlie Marcus' lab at Harvard. All of the dil fridges I have ever seen are from Oxford Instruments.

Although there are a bunch of models, all the ones I've seen are almost two meters tall and a little under a meter in diameter, and you load your test sample in from the top on a long insert, so you need another two meters' clearance above (plus a small winch). A dil fridge is limited in theory to something like 7 millikelvin, and in practice to higher values depending on model. They can typically extract only a few hundred microwatts of heat from the device under test, which is limited to a few cubic centimeters. You're also going to need lots of clearance around the fridge, for operators, rack-mount equipment, and space to move equipment down the aisles. All this is by way of saying that you need quite a bit of space, power, and money for each setup. Let's call such a setup a "pod".

I'm considering the design of a quantum multicomputer, a set of quantum computing nodes interconnected via a quantum network. Let's start with one node per pod, and again set our target at a machine for factoring a 1,024-bit number. If we put, say, five application-level qubits per node, and add two levels of Steane [[7,1,3]] code, we've got roughly 250 physical qubits per node (5 × 49 = 245), and 1,024 nodes in 1,024 pods. If each pod requires an area three meters square, we need an area about 100 meters by 100 meters for our total machine (bigger than a football field, smaller than a football stadium).
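For anyone who wants to poke at those numbers, here is a minimal sketch of the arithmetic. The constants come straight from the paragraph above; the function names are just illustrative, and the floor plan assumes the pods tile a square with no extra aisle space.

```python
import math

# Figures taken from the text; change them to try other layouts.
APP_QUBITS_PER_NODE = 5   # application-level qubits in each node
STEANE_BLOCK = 7          # physical qubits per logical qubit in [[7,1,3]]
QEC_LEVELS = 2            # levels of concatenation
NODES = 1024              # one node per pod
POD_SIDE_M = 3.0          # each pod occupies a 3 m x 3 m square


def physical_qubits_per_node(app_qubits, block, levels):
    """Each level of concatenation multiplies the qubit count by the block size."""
    return app_qubits * block ** levels


def floor_side_m(pods, pod_side_m):
    """Side of a square floor that holds all pods, ignoring extra aisles."""
    return math.sqrt(pods * pod_side_m ** 2)


per_node = physical_qubits_per_node(APP_QUBITS_PER_NODE, STEANE_BLOCK, QEC_LEVELS)
side = floor_side_m(NODES, POD_SIDE_M)

print(per_node)          # 245, which the text rounds to 250
print(per_node * NODES)  # 250880 physical qubits in the whole machine
print(side)              # 96.0 meters on a side, i.e. "about 100 by 100"
```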

The thermal engineering is a serious problem. The electronics for each qubit currently fill a 19-inch rack, but a lot of that is measurement, not control, and of course in a production-engineered system we can increase the integration. It's still going to be a lot of equipment for 250 qubits, and worse, a lot more heat. It seems unlikely that our thermal budget will allow us to put the control electronics in the dil fridge (and some of it may not operate properly at such low temperatures, anyway). So, we're going to have to control it from outside. That means lots of signal lines - one per qubit for control, plus quite a few more (maybe as many as one per qubit) for measurement and various other things. Probably 400-500 microcoaxes that have to reach inside the fridge, each one carrying heat with it. Yow. Unless you substantially raise the extraction rate of the dil fridge, each line and measurement device is limited to about a microwatt - plus the heat that will leak through the fridge body itself. In the end it's almost certainly a multi-stage system, with parts running at room temperature, parts at liquid helium temps, and only the minimum necessary in the dil fridge itself.
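A rough sanity check on that "about a microwatt" figure. The 400 microwatt cooling power is only a stand-in for "a few hundred microwatts", and two lines per qubit is the upper end of the estimate above; both are assumptions for illustration, not measured values, and the sketch ignores heat leaking through the fridge body.

```python
def per_line_budget_uw(cooling_power_uw, qubits, lines_per_qubit):
    """Heat budget per signal line if the cooling power is split evenly."""
    lines = qubits * lines_per_qubit
    return cooling_power_uw / lines


# Assumed: 400 uW of cooling power, 250 qubits, one control line plus
# one measurement line per qubit (500 microcoaxes in total).
budget = per_line_budget_uw(cooling_power_uw=400.0, qubits=250, lines_per_qubit=2)
print(budget)  # 0.8 microwatts per line
```

Anything dissipated by the qubit device itself comes out of the same budget, so the real per-line allowance would be lower still.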

Finally, we have to think about money again. We've just set a limit of about $100K per pod. I've never priced dil fridges, but I'd be surprised if they're cheaper than that (I wonder what kind of volume discount you get when you offer to buy a thousand dil fridges...), and we have a bunch of electronics and whatnot to pay for, in addition to the quantum computing device itself! We can do better on floor space and money if we can fit more than one node per pod, but doubling or quadrupling the number of coaxes and the heat budget is a daunting proposition on an already extremely aggressive engineering challenge.
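The per-pod limit falls out of dividing the hundred-million-dollar target by the pod count. Here is a small sketch, under the assumption that the whole budget is split evenly across pods; the `nodes_per_pod` parameter is hypothetical and just shows what packing more nodes into a fridge would buy.

```python
import math

TOTAL_BUDGET_USD = 100_000_000  # target price from the start of this post
NODES = 1024                    # nodes needed for the 1,024-bit factoring machine


def budget_per_pod(total_usd, nodes, nodes_per_pod=1):
    """Even split of the total budget over however many pods are needed."""
    pods = math.ceil(nodes / nodes_per_pod)
    return total_usd / pods


print(budget_per_pod(TOTAL_BUDGET_USD, NODES))     # 97656.25, i.e. about $100K
print(budget_per_pod(TOTAL_BUDGET_USD, NODES, 2))  # 195312.5
print(budget_per_pod(TOTAL_BUDGET_USD, NODES, 4))  # 390625.0
```

Doubling the nodes per pod doubles the money available per fridge, but as noted above it also doubles the coax count and the heat load.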

It's not that I think this linear extrapolation from our current state is necessarily the way production systems will really be built (it's also worth noting that NMR, ion trap, optical lattice, and atom chip systems would require a completely different analysis). I'm trying to create a rough idea of the constraints we face and the problems that must be solved. One thing's for sure, though: when I found my quantum computing startup, world-class thermal and packaging engineers will be just as high on my shopping list as the actual quantum physicists.

Thursday, November 10, 2005

NEC's Quantum Telephone

This posting is offline for the moment. Please send me email if you have questions or concerns.

[I have other postings on QKD at Stop the Myth and More on the Myth.]

Thursday, November 03, 2005

Forty Bucks a Qubit

First in a series of thoughts on what it means to build a scalable quantum computer... [Second installment is Bigger Than a Breadbox, Smaller Than a Football Stadium.]

My guess on the price at which the first production quantum computer is sold: forty bucks a qubit. Of course, the definition of "production" is fuzzy, but I mean a machine that is bought and installed for the purpose of solving real problems, not just a "let's support research" buy from the U.S. government. That is, it has to solve a problem that there's not a comparable classical solution for.

This guess is based on the assumption that the machine will be built to run Shor's factoring algorithm on a 1,024-bit number, which is sort of a questionable assumption, but we'll go with it (some physicists want them for direct simulation of complex Hamiltonians, but I don't understand that well enough to make any predictions there at all). That takes about five kilobits of application-level qubit space, and we'll multiply by fifty to support two levels of QEC. The [[7,1,3]] Steane code applied twice would be 49. Codes in production use are likely to be somewhat more efficient (maybe as good as 3:1, but I doubt much better), but there will no doubt be a need for additional space I didn't account for above, so we'll guess a quarter of a million physical qubits.

If you assume ten million U.S. dollars is a reasonable price for a machine with unique capabilities, that gives you forty bucks a qubit. I could easily be off by an order of magnitude in either direction, but I'll be very surprised if it's over a thousand dollars or under a buck.
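The estimate is simple enough to write down as a few lines of code, which makes it easy to plug in your own guesses. This is only a sketch of the arithmetic above; the function name is made up for illustration.

```python
def price_per_qubit(machine_price_usd, app_qubits, qec_overhead):
    """Machine price divided by the number of physical qubits."""
    physical_qubits = app_qubits * qec_overhead
    return machine_price_usd / physical_qubits


# Numbers from the text: $10M machine, about 5,000 application-level
# qubits, and a factor of fifty for two levels of error correction.
print(5_000 * 50)                              # 250000 physical qubits
print(price_per_qubit(10_000_000, 5_000, 50))  # 40.0 dollars per qubit
```

An order of magnitude either way on the machine price gives four dollars or four hundred dollars a qubit, both inside the buck-to-a-thousand range.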

Anyway, that's my prediction. What do you think? High? Low?

Next questions: what technology, and when?

I should put up a prediction at LongBets.org...
